BigFlow

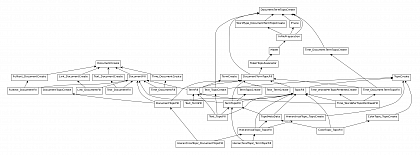

BigFlow is a data-driven workflow approach that mediates between data preparation, machine learning, and interactive, visual data exploration in big data applications. It consists of three components: a lightweight Java library for data-driven workflows; a modeling framework that combines entity-relationship models with graphical models from machine learning; and an abstract data manipulation language for incrementally building complex data transformation operations.

Machine learning models are applicable to many different domains. For example, topic models, a class of probabilistic graphical models, can be applied to documents, bioinformatics data, or images and videos. However, each domain needs its own data preparation. Furthermore, after learning, the results need to be translated back into the context of the application data so that domain experts can analyze, interpret, and evaluate them.

There are gaps between data preparation and machine learning, as well as between machine learning and interactive, visual data exploration. The state of the art is to close these gaps with scripts containing glue code that transforms the output of one step into the input of the next. BigFlow instead offers a lightweight Java library for data-driven workflows, called the command manager. It structures preprocessing and analysis pipelines into simple commands that communicate through a context object. The library supports online generation of workflows based on the dependencies between commands, as well as nested workflows. This makes it easy to reuse software components and to adapt functionality by changing configuration alone, without writing code.
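
The idea of commands that share a context object and are ordered by their dependencies can be illustrated with a minimal sketch. This is not the command manager's actual API: the names `WorkflowSketch`, `Context`, `Command`, `resolveOrder`, and the three demo commands are all hypothetical. The sketch only assumes that each command declares the commands it depends on, and it uses a plain depth-first topological sort to derive the execution order.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Illustrative sketch only; all names here are hypothetical, not the BigFlow API. */
public class WorkflowSketch {

    /** Shared state through which commands exchange their inputs and outputs. */
    public static class Context {
        private final Map<String, Object> values = new HashMap<>();
        public void put(String key, Object value) { values.put(key, value); }
        public Object get(String key) { return values.get(key); }
    }

    /** One workflow step that names the steps it depends on. */
    public interface Command {
        String name();
        List<String> dependsOn();
        void execute(Context context);
    }

    /** Small factory so that commands can be written as lambdas. */
    public static Command command(final String name, final List<String> deps,
                                  final Consumer<Context> body) {
        return new Command() {
            public String name() { return name; }
            public List<String> dependsOn() { return deps; }
            public void execute(Context context) { body.accept(context); }
        };
    }

    /** Derives an execution order from the declared dependencies. */
    public static List<String> resolveOrder(Map<String, Command> commands) {
        List<String> order = new ArrayList<>();
        Map<String, Boolean> done = new HashMap<>(); // false = in progress, true = finished
        for (String name : commands.keySet()) {
            visit(name, commands, done, order);
        }
        return order;
    }

    private static void visit(String name, Map<String, Command> commands,
                              Map<String, Boolean> done, List<String> order) {
        Boolean state = done.get(name);
        if (Boolean.TRUE.equals(state)) return;
        if (Boolean.FALSE.equals(state)) {
            throw new IllegalStateException("Cyclic dependency at " + name);
        }
        Command command = commands.get(name);
        if (command == null) {
            throw new IllegalArgumentException("Unknown command: " + name);
        }
        done.put(name, false);
        for (String dependency : command.dependsOn()) {
            visit(dependency, commands, done, order);
        }
        done.put(name, true);
        order.add(name); // appended only after all of its dependencies
    }

    /** Runs every command in dependency order against one fresh context. */
    public static Context run(Map<String, Command> commands) {
        Context context = new Context();
        for (String name : resolveOrder(commands)) {
            commands.get(name).execute(context);
        }
        return context;
    }

    /** Three toy commands, deliberately registered out of execution order. */
    public static Map<String, Command> demoCommands() {
        Map<String, Command> commands = new LinkedHashMap<>();
        for (Command c : Arrays.asList(
                command("count", Arrays.asList("tokenize"),
                        ctx -> ctx.put("tokenCount", ((String[]) ctx.get("tokens")).length)),
                command("tokenize", Arrays.asList("load"),
                        ctx -> ctx.put("tokens", ((String) ctx.get("text")).split("\\s+"))),
                command("load", Collections.<String>emptyList(),
                        ctx -> ctx.put("text", "big data workflows")))) {
            commands.put(c.name(), c);
        }
        return commands;
    }

    public static void main(String[] args) {
        Map<String, Command> commands = demoCommands();
        System.out.println(resolveOrder(commands));
        System.out.println(run(commands).get("tokenCount"));
    }
}
```

Because the order is computed from the declared dependencies rather than hard-coded, adding or swapping a command in this sketch only changes the set of registered commands, which is the property that lets a configuration file stand in for glue code.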